Google is always ready to give you answers to every question you might have.

Have you wondered how that’s possible?

The tech giant controls 92% of the search engine market and receives 8.5 billion searches per day, with close to 90 billion visits in a month, according to Oberlo.

All of this starts with collecting and storing the information available on the internet. Google calls this indexing.

The Google indexing process uses a bot to scan publicly available content on the internet and store it in a database that Google draws on to answer searchers’ queries.

The indexing process isn’t always successful, because some websites, knowingly or unknowingly, block the bot from scanning their pages.

If your website depends on Google traffic, your website needs to be indexable. In this article, we’ll cover Google indexing and how to solve common problems.

What Is Google Indexing?

Google indexing is the process of storing crawled web pages in Google’s database, known as the index.

Here’s how Google defines it:

“A page is indexed by Google if it has been visited by the Google crawler ("Googlebot"), analyzed for content and meaning, and stored in the Google index. Indexed pages can be shown in Google Search results (if they follow Google's webmaster guidelines).

While most pages are crawled before indexing, Google may also index pages without access to their content (for example, if a page is blocked by a robots.txt directive).”

Googlebot makes this process possible: it automatically scans (crawls) billions of web pages daily and stores them in Google’s database.

According to Google, Googlebot is the generic name for Google’s web crawler. The crawler searches the web for content that can match users’ search queries.

Before Google can index your page, it has to crawl the page to see whether its content meets Google’s requirements. Crawling is the process of web crawlers following hyperlinks across the web to discover new content or updated versions of existing content.

Every page discovered this way contains more hyperlinks, so the crawler repeats the whole process for each new page it finds.
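To make this discovery loop concrete, here’s a minimal Python sketch of breadth-first link discovery. It assumes a toy, hypothetical link graph held in memory (the page paths and the `crawl` function are made up for illustration). A real crawler like Googlebot also fetches pages over HTTP, checks robots.txt, and schedules revisits, none of which is modeled here.

```python
from collections import deque

def crawl(link_graph, seeds):
    """Breadth-first discovery over a hypothetical {page: [linked pages]} graph."""
    discovered = set(seeds)   # pages the crawler already knows about
    queue = deque(seeds)      # pages waiting to be "fetched"
    visit_order = []
    while queue:
        page = queue.popleft()
        visit_order.append(page)
        for link in link_graph.get(page, []):
            if link not in discovered:   # follow each new link only once
                discovered.add(link)
                queue.append(link)
    return visit_order

site = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/about": [],
    "/blog/post-1": ["/"],
    "/landing-page": ["/"],  # links out, but nothing links to it
}

print(crawl(site, ["/"]))
# ['/', '/blog', '/about', '/blog/post-1']
```

Here, /landing-page is never visited: it links out to the homepage, but no discovered page links to it, so the crawler has no path to find it.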

How can you be sure Google is indexing your website?

How Do You Know If Google Indexes Your Page?

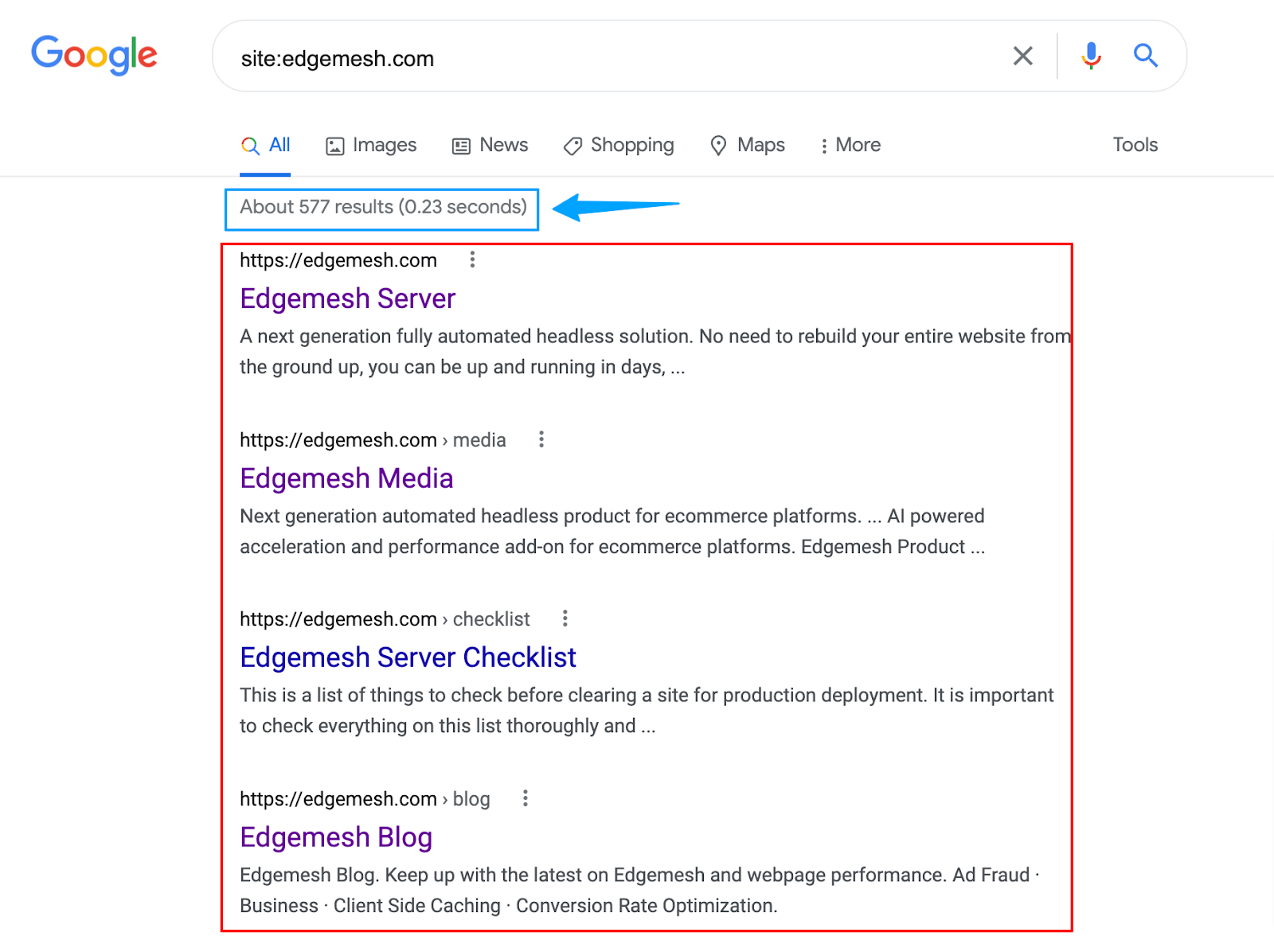

- Go to your browser’s address bar, type in https://www.google.com, and press Enter.

- In the search box, type site:yourwebsite.com (no space after the colon).

- Once the results load, you’ll see pages from your website and an approximate count of how many pages Google has indexed.

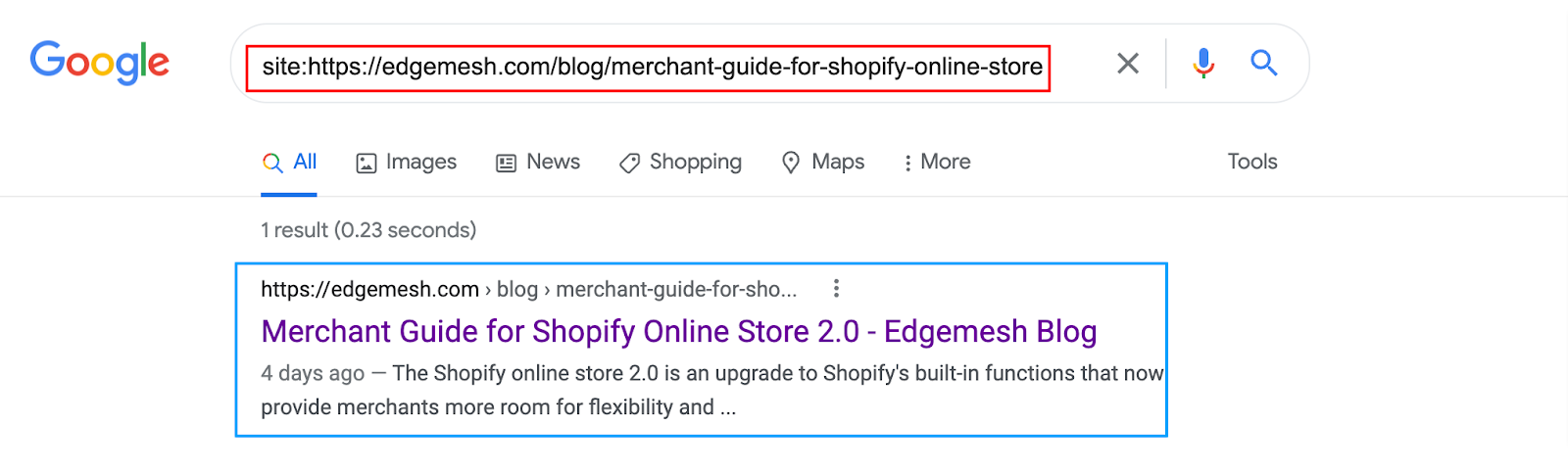

Let's say you recently uploaded new content. How do you know if it’s already indexed?

Here’s how:

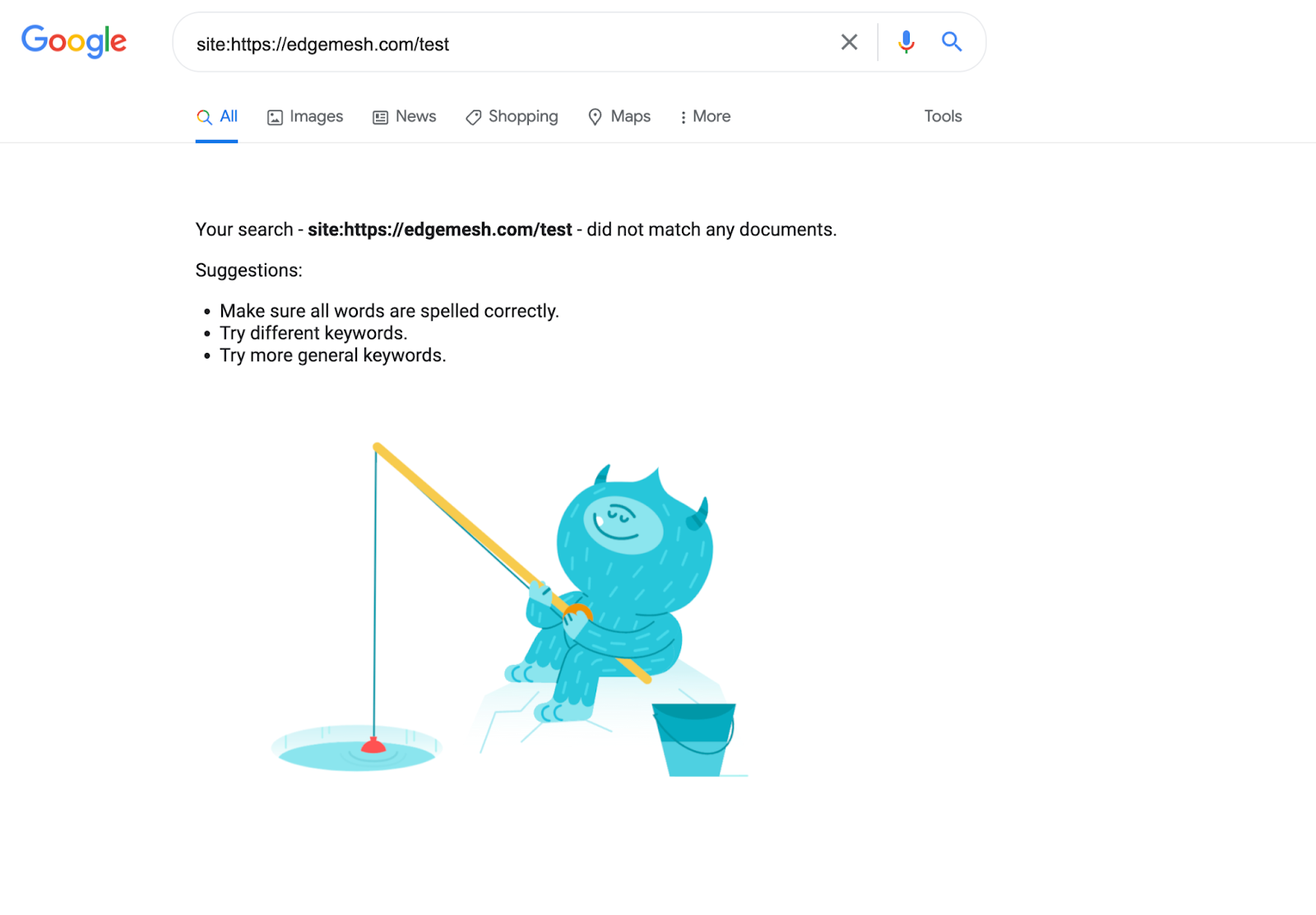

- Repeat the same process, but this time search for site: followed by the specific URL (for example, site:yourwebsite.com/new-post).

If nothing shows up when you search for your content like this on Google, then it’s not indexed.
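If you check many URLs, a small helper can build these searches for you to open in a browser. This is a sketch that assumes Google’s familiar https://www.google.com/search?q= URL format; `site_search_url` is a hypothetical helper, not part of any Google API.

```python
from urllib.parse import urlencode

def site_search_url(target):
    """Build a Google search URL for a site: query (hypothetical helper)."""
    # urlencode percent-encodes ':' and '/', so the query survives intact in the URL
    return "https://www.google.com/search?" + urlencode({"q": f"site:{target}"})

print(site_search_url("yourwebsite.com"))
# https://www.google.com/search?q=site%3Ayourwebsite.com
print(site_search_url("yourwebsite.com/new-post"))
# https://www.google.com/search?q=site%3Ayourwebsite.com%2Fnew-post
```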

You can also use Google Search Console for a more accurate picture of which pages Google has indexed.

Here’s how to do it.

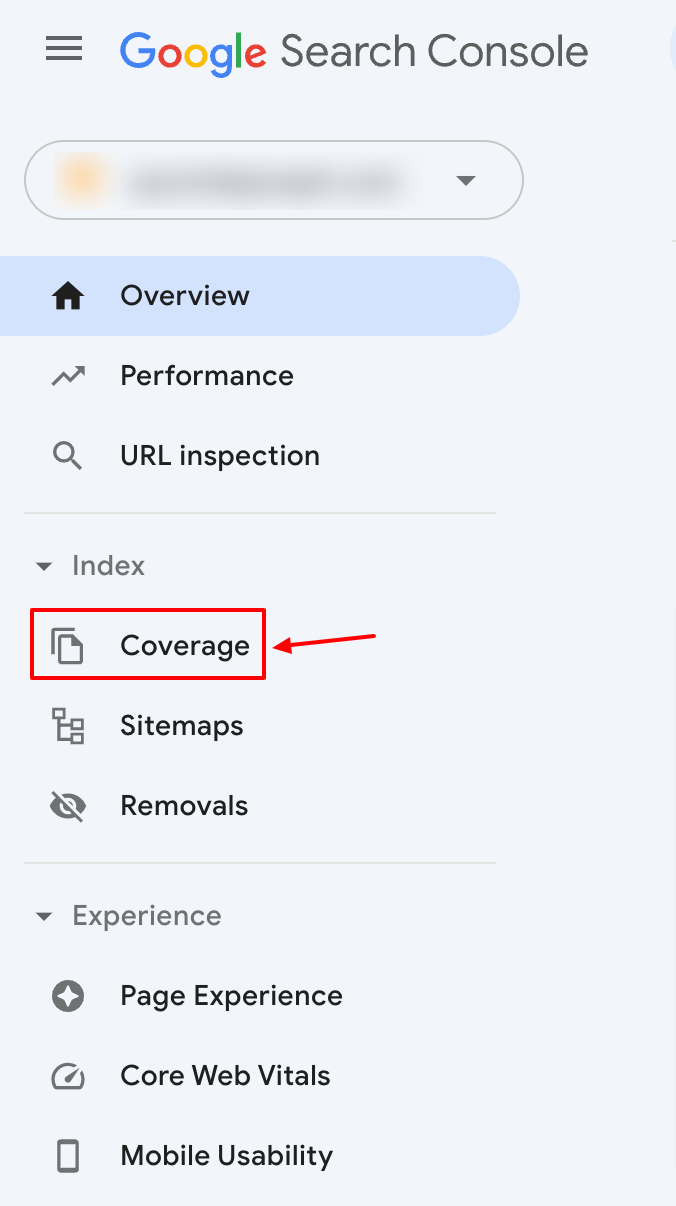

- Log in and go to your Google Search Console dashboard.

- In the left sidebar, click the “Index” section.

- Then click the “Coverage” option from the dropdown.

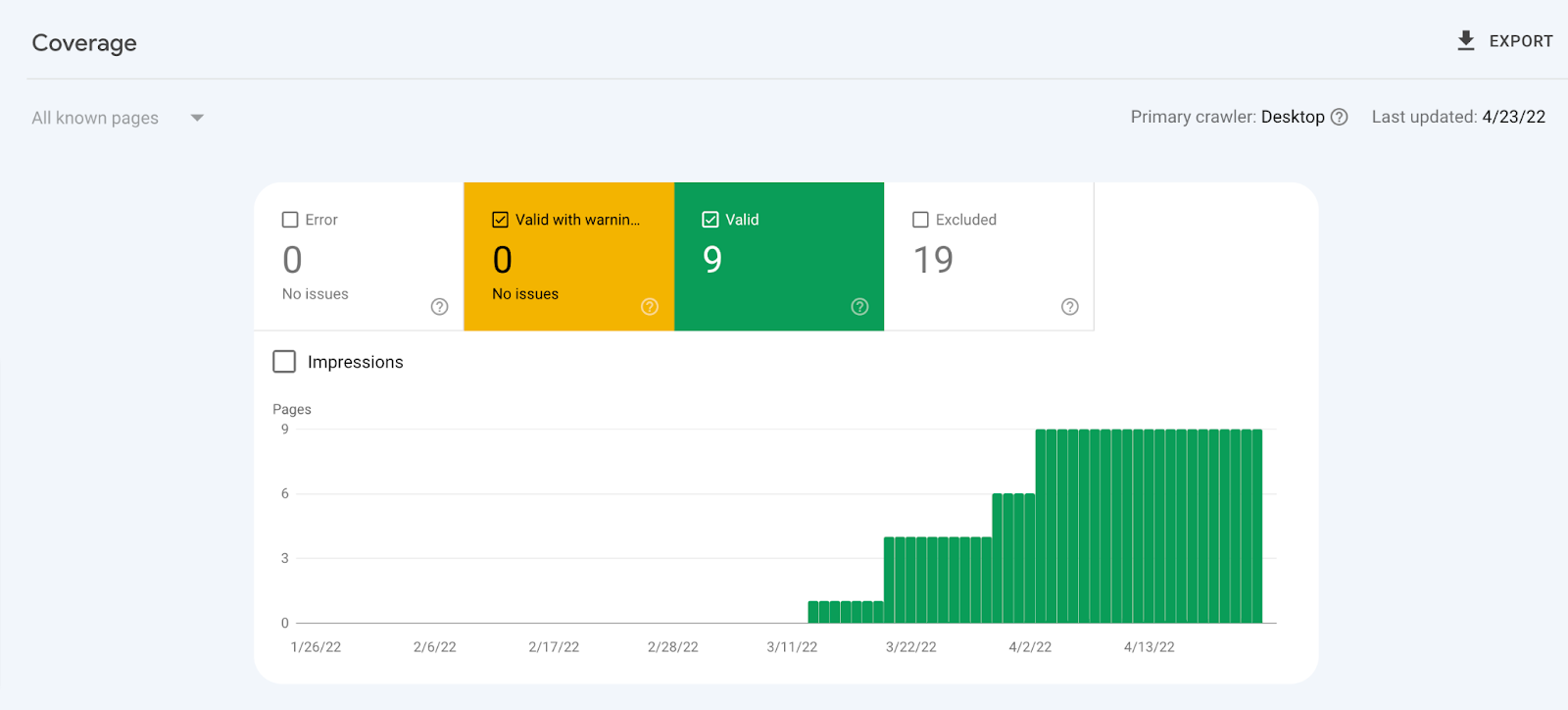

- Check for the number of valid pages, and take note of pages with warnings.

Note: If any of your pages have warnings, check them out. Also, if the number of valid pages is zero or lower than it should be, Google hasn’t indexed all of your pages. Here are some reasons Google may not be indexing your pages.

Why Is Google Not Indexing Your Pages?

Google has acknowledged that it can’t index every web page on the internet.

One contributing factor is the crawl budget: the number of pages Googlebot can and wants to crawl on your website within a given period, such as a day.

For small to medium website owners, the crawl budget isn’t something to worry about.

Although every website has a crawl budget, Google is excellent at discovering new pages on smaller sites.

However, some cases require you to pay attention to the crawl budget. Here are a few.

- You upload new content daily: Running an active website has its perks, but it also has limits, because it becomes hard for Google to keep up with your content. This makes it difficult to index all your pages. News websites like CNN, Fox News, and the BBC are good examples.

- Your website is too big: Websites with over 5,000 pages can be difficult to index completely, because crawling every page may exceed their crawl budget.

For example, if your website has 300,000 pages and Googlebot can crawl only 1,500 pages a day, it’ll take the bot approximately 200 days (over 6 months) to crawl all of your pages.
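The estimate above is simple division: 300,000 pages ÷ 1,500 pages per day = 200 days. The sketch below reproduces it and, as a hypothetical extension, subtracts pages you add while the crawl is underway. It assumes a constant daily crawl budget and that every page needs exactly one crawl, which real crawling doesn’t guarantee, so treat the result as a rough estimate rather than a forecast.

```python
import math

def days_to_crawl(total_pages, pages_per_day, new_pages_per_day=0):
    """Rough days to crawl a backlog, assuming a constant daily crawl budget
    and that each page needs to be crawled once (both simplifications)."""
    net_progress = pages_per_day - new_pages_per_day  # backlog shrinks by this much per day
    if net_progress <= 0:
        return math.inf  # new pages arrive as fast as they're crawled
    return total_pages / net_progress

print(days_to_crawl(300_000, 1_500))        # 200.0
print(days_to_crawl(300_000, 1_500, 500))   # 300.0
```

With 500 new pages a day, the backlog shrinks by only 1,000 pages a day, so the same site takes 300 days instead of 200.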

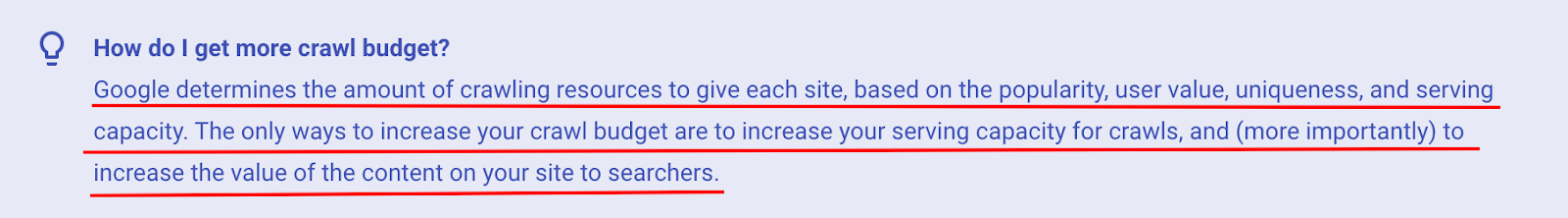

If you keep adding new pages on top of your existing ones, it’ll take even longer. So, how do you get a bigger crawl budget?

The short answer: it depends on Google, and on you.

Here’s how Google puts it.

In short, Google will always try to crawl your website if you allow it to. Not every indexing issue is caused by Google; many are caused by web pages that don’t follow Google’s guidelines.

Below are 8 reasons Google isn’t indexing your website.

8 Reasons Google Isn’t Indexing Your Website

1. Your Website Has No History (New)

New websites often aren’t indexed by Google within their first 4 weeks (sometimes longer), depending on how quickly they grow.

One reason for this: Googlebot hasn’t yet understood the website’s context well enough to index it in its database and serve it in search results.

If your website isn’t getting picked up by Google in its first few months, don’t beat yourself up about it. It’s normal; it’s not you, it’s Google’s trust issues. One contributing factor is a lack of quality backlinks.

For Google, backlinks are trust signals, or votes of confidence from one website to another. With enough backlinks from quality sources pointing to your website, you gain authority. Then Google starts seeing you as a site it can crawl, index, and serve up on its SERPs.

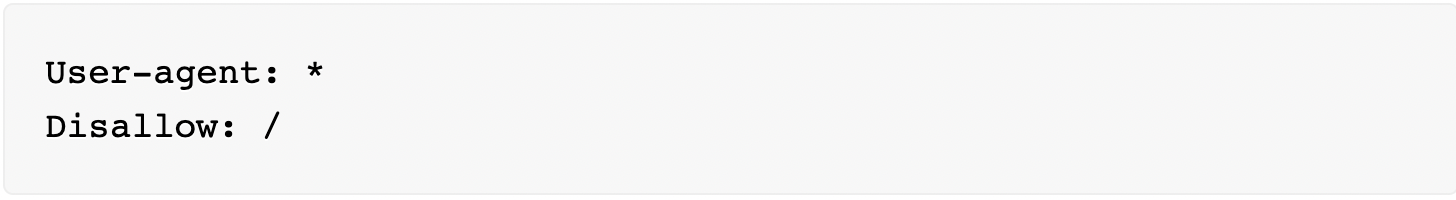

2. You’re Blocking The Crawler With Robots.txt

Have you recently installed a plugin on your website, and suddenly your pages aren’t getting indexed anymore? The plugin, or a theme or app you’ve integrated with your website, may have added robots.txt rules that block Googlebot from crawling some of your pages.

The rule usually responsible for this is `Disallow: /` under a `User-agent: *` line.

That rule tells search engine crawlers, and robots in general, that everything under the root path “/” (in other words, the entire site) is off-limits for crawling.
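You can sanity-check rules like this with Python’s standard-library `urllib.robotparser`. The sketch below assumes example.com URLs and a hypothetical `is_crawlable` helper. Note that this parser applies the first matching rule and doesn’t support Google’s wildcard extensions, so it won’t always agree with Googlebot on complex files; use it for quick checks only.

```python
from urllib.robotparser import RobotFileParser

def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Check a URL against robots.txt rules using Python's standard-library parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

# Blocks every path on the site for all crawlers
block_everything = """
User-agent: *
Disallow: /
"""

# Blocks only the admin area
block_admin_only = """
User-agent: *
Disallow: /wp-admin/
"""

print(is_crawlable(block_everything, "https://example.com/blog/post"))   # False
print(is_crawlable(block_admin_only, "https://example.com/blog/post"))   # True
print(is_crawlable(block_admin_only, "https://example.com/wp-admin/x"))  # False
```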

3. You’re Using “Noindex” Tags

The noindex tag tells search engine crawlers not to index a web page or show it in search results. If you include this tag in the <head> section of a page, crawlers won’t index that page or let it show up on SERPs.

Another way you might be hindering indexing is by sending noindex in the X-Robots-Tag HTTP response header.

Note: Some crawlers interpret noindex differently, so some search engines may still let some of these pages show up on their SERPs.
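To check a page for both forms of noindex, here’s a rough Python sketch using the standard-library `html.parser`. The `blocks_indexing` helper and its inputs (the page’s HTML as a string and its response headers as a dict) are assumptions for illustration. It treats `none` as equivalent to noindex, which is how Google documents that directive, and it doesn’t handle headers that target a specific crawler (such as `googlebot: noindex`).

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""
    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = {name: (value or "") for name, value in attrs}
        if attrs.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attrs.get("content", "").split(","):
                self.directives.add(directive.strip().lower())

def blocks_indexing(html, headers):
    """True if the page's meta tags or X-Robots-Tag header contain noindex (or none)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # HTTP header names are case-insensitive, so compare them in lowercase.
    header = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    directives = parser.directives | {d.strip().lower() for d in header.split(",")}
    return bool(directives & {"noindex", "none"})

page = '<head><meta name="robots" content="noindex, follow"></head>'
print(blocks_indexing(page, {}))                                      # True
print(blocks_indexing("<head></head>", {"X-Robots-Tag": "noindex"}))  # True
```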

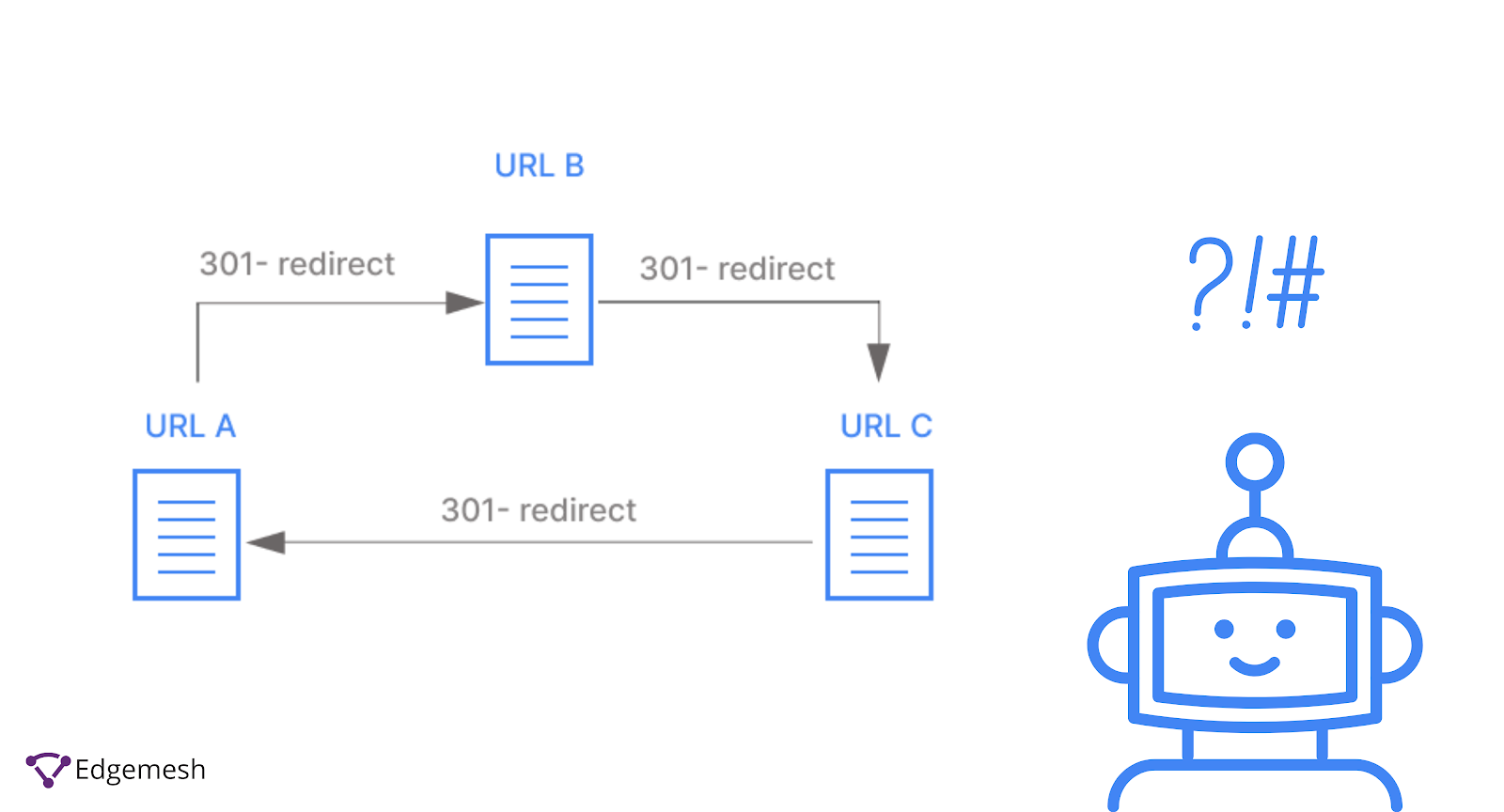

4. You Have Too Many Redirects (Redirect Loop)

A redirect loop occurs when URL redirects between two or more endpoints never end. For example, URL A redirects to B, B redirects to C, and C redirects back to A, repeating the cycle forever.

Like other crawlers, Googlebot limits the number of redirects it will follow before reaching the main content. If the crawler finds that your redirect chain is too long or never ends, it won’t index the page.

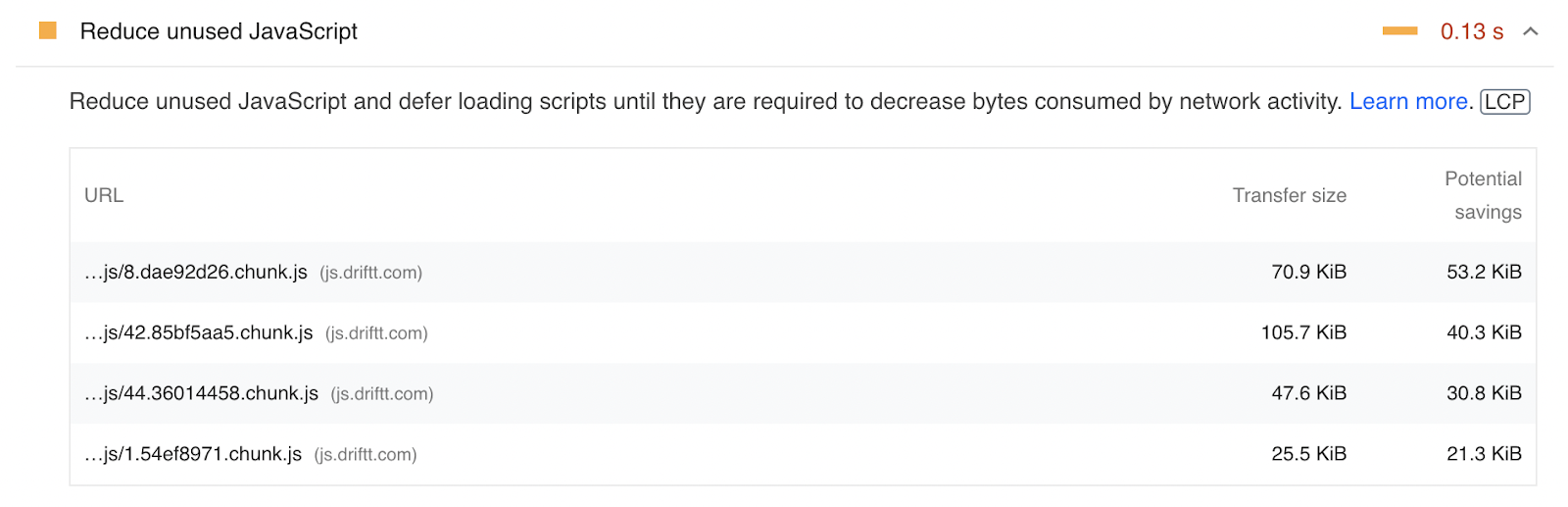

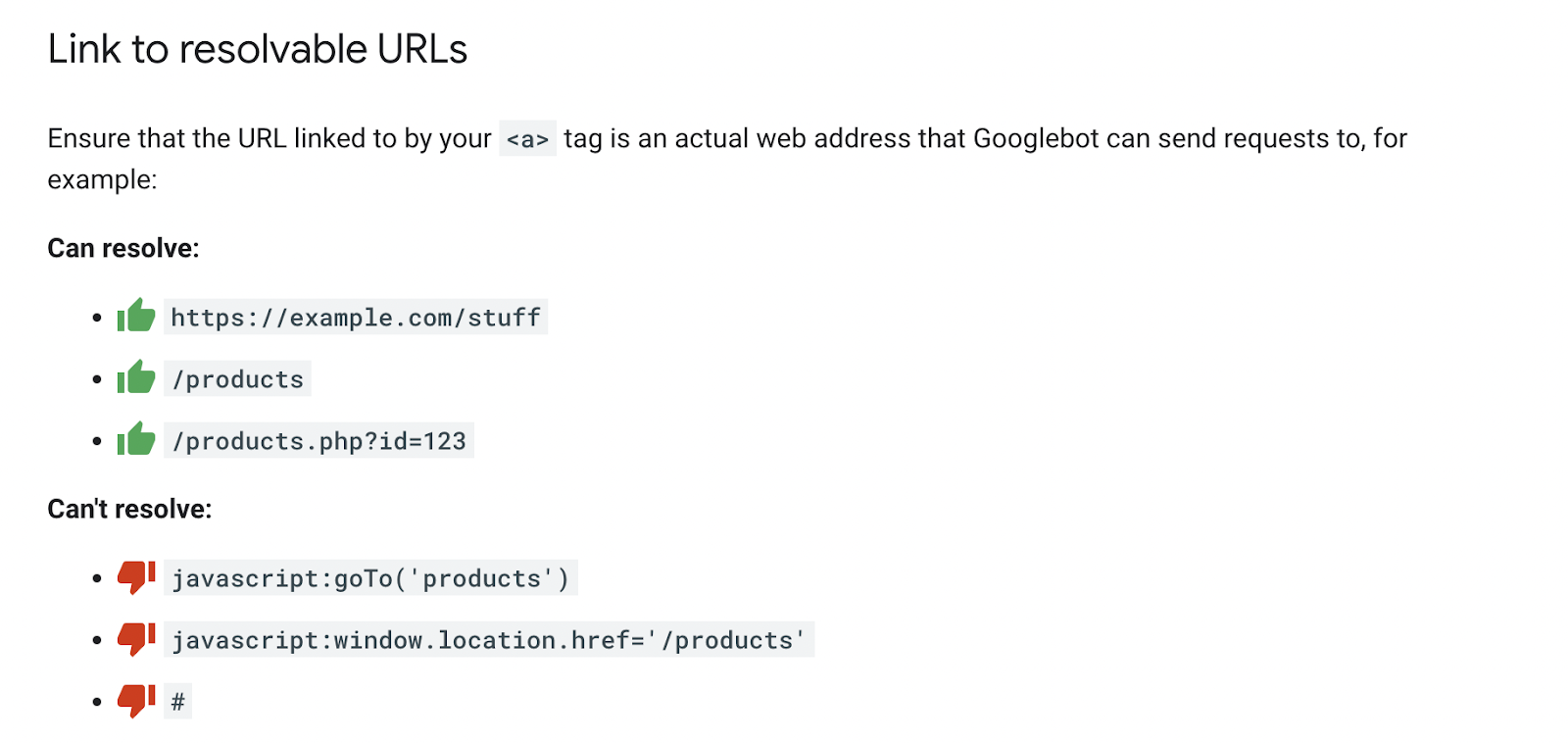
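The sketch below models that check in Python, assuming a hypothetical redirect map of {from_url: to_url} pairs. It follows redirects until it reaches a URL that doesn’t redirect, revisits a URL it has already seen (a loop), or exceeds a hop limit. The default of 10 hops is an assumption chosen for illustration, not a statement of Googlebot’s exact limit.

```python
def follow_redirects(redirects, start, max_hops=10):
    """Follow a {from_url: to_url} map and classify the result.

    Returns (final_url, status) where status is "ok", "loop", or "too_many_hops".
    max_hops=10 is an assumed limit for illustration.
    """
    seen = {start}
    url, hops = start, 0
    while url in redirects:
        if hops == max_hops:
            return url, "too_many_hops"
        url = redirects[url]
        hops += 1
        if url in seen:          # we've been here before: the chain never ends
            return url, "loop"
        seen.add(url)
    return url, "ok"             # reached a URL that doesn't redirect

loop = {"/a": "/b", "/b": "/c", "/c": "/a"}
chain = {"/old": "/new", "/new": "/final"}
print(follow_redirects(loop, "/a"))     # ('/a', 'loop')
print(follow_redirects(chain, "/old"))  # ('/final', 'ok')
```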

5. You’re Rendering Your Content With JavaScript

Using JavaScript isn’t necessarily a deal breaker; it depends on how you choose to render your content. However, some JavaScript techniques can keep bots from crawling and indexing your website.

Some of these include:

- Inlining JavaScript

- Blocking JavaScript to speed up load time

- Injecting JavaScript directly into your DOM (Document Object Model)

- Excessive use of JavaScript

These can all be red flags for Googlebot because they can prevent it from seeing the complete version of your page. Google prefers content rendered in HTML, as it’s easier to read, process, and store.

Note: Google is not against rendering your content with JavaScript as long as you follow its guidelines for crawlable links.
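Google’s guidelines for crawlable links say it can reliably follow `<a>` elements with an `href` attribute that contains a real URL. The rough sketch below scans raw HTML (before any JavaScript runs) and separates those links from elements that rely on JavaScript, such as `javascript:` URLs or `onclick` handlers. The `audit_links` helper is hypothetical and only a heuristic.

```python
from html.parser import HTMLParser

class LinkAudit(HTMLParser):
    """Sorts link-like elements into ones crawlers can follow and ones they likely can't."""
    def __init__(self):
        super().__init__()
        self.crawlable = []   # hrefs from <a href="..."> with a real URL
        self.suspect = []     # tags that look like links but rely on JavaScript

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        href = (attrs.get("href") or "").strip()
        if tag == "a" and href and not href.lower().startswith("javascript:"):
            self.crawlable.append(href)
        elif tag == "a" or "onclick" in attrs:
            self.suspect.append(tag)

def audit_links(html):
    parser = LinkAudit()
    parser.feed(html)
    return parser.crawlable, parser.suspect

html = """
<a href="/products">Products</a>
<a href="javascript:goTo('about')">About</a>
<span onclick="goTo('blog')">Blog</span>
"""
print(audit_links(html))  # (['/products'], ['a', 'span'])
```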

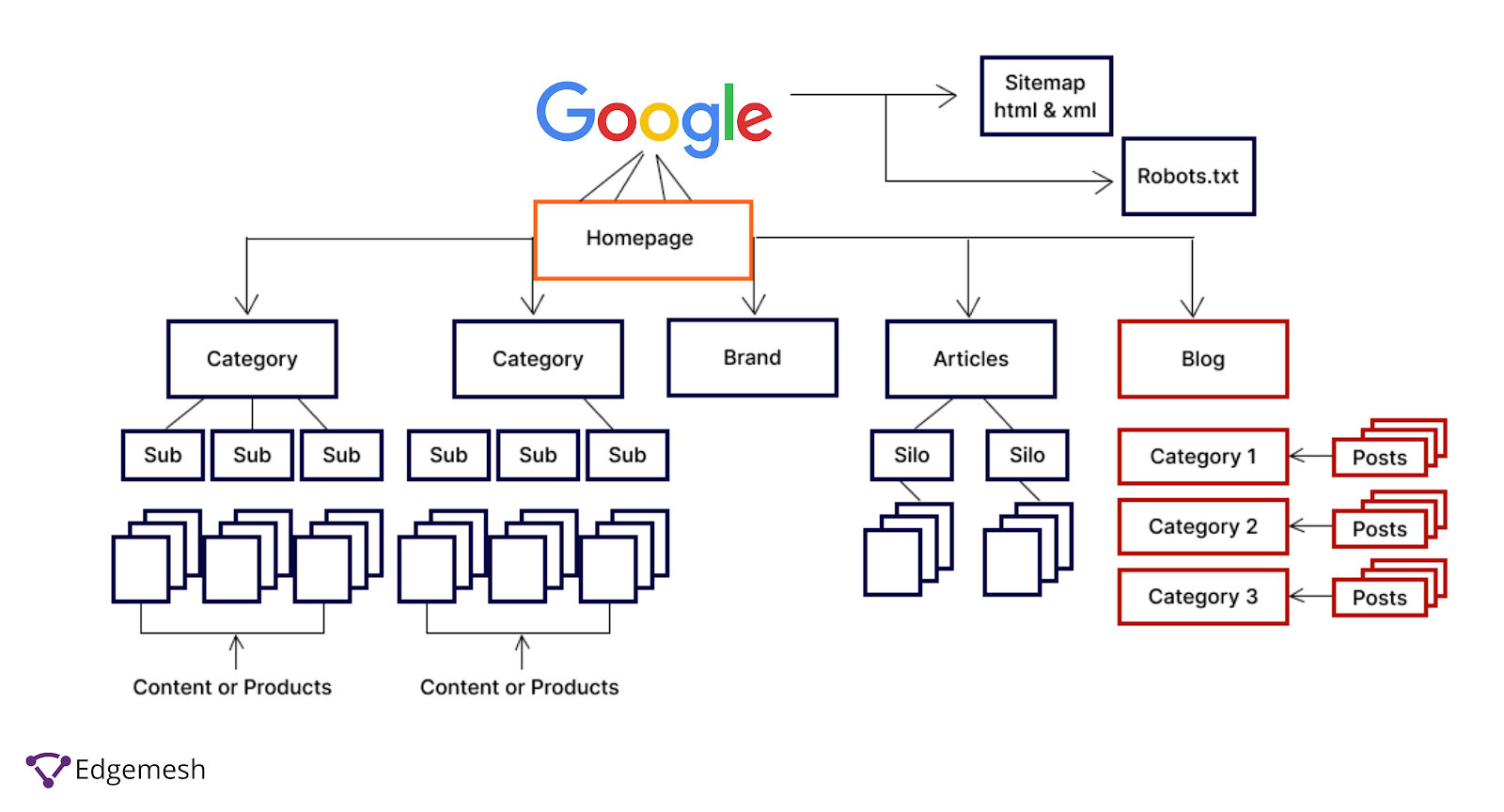

6. You Don’t Have A Sitemap

Think of a sitemap as the architectural plan of a house. With this plan, you know where every intended structure goes in the house.

The same goes for your website. Without a sitemap, search engines have to find your pages on their own, as if you were building in the shadows without intending to let anyone visit.

A sitemap is a file that lists the pages on your website (and can include details such as when each page was last updated), giving search engines a blueprint of your site.

With this list, search engine bots can discover and crawl your pages more easily, including pages that are hard to reach through hyperlinks alone.
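A sitemap is usually an XML file that follows the sitemaps.org protocol. Here’s a minimal Python sketch that generates one with the standard-library `xml.etree.ElementTree`. The `build_sitemap` helper and example URLs are hypothetical, and real sitemaps can carry more optional fields than the `lastmod` date shown here.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build a minimal XML sitemap from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = loc              # ElementTree escapes &, <, >
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod  # W3C date, e.g. 2024-01-15
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")

print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/new-post", None),
]))
```

Building the XML with ElementTree rather than string concatenation means characters like `&` in URLs are escaped automatically.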

7. You’ve Been Penalized By Google

Penalties are never a good thing online or offline, but they discourage bad practices from repeating. Google has its own way of penalizing websites that go against its guidelines.

According to HubSpot:

“A Google penalty is a punishment against a website whose content conflicts with the marketing practices enforced by Google. This penalty can come as a result of an update to Google's ranking algorithm, or a manual review that suggests a web page used "black hat" SEO tactics.”

In other words, Google doesn’t tolerate shady approaches that violate its guidelines. If you fail to comply, you run the risk of Google de-indexing your website from its database.

There are two types of Google penalties.

Algorithmic Penalties

Google regularly updates its algorithms to better serve users. Notable algorithm changes include Panda (2011), Hummingbird (2013), Penguin 4.0 (2016), Fred (2017), and BERT (2019).

Each update comes with new guidelines for website owners like you. Going against them means you’ve violated the rules, which can result in an algorithmic penalty.

For example, mobile-first indexing was an algorithmic update, and websites with a poor mobile experience were penalized: many of their web pages dropped in rank on SERPs.

Because these penalties are applied automatically, you won’t be notified about them. All you can do is review your website to make sure you’re following all the rules.

Manual Penalties

Google employees enforce these penalties after personally reviewing your website to see if it’s aligned with its guidelines. If you’re found guilty of any guideline violation, you'll either be served a warning or be de-indexed from Google’s database.

8. You Don’t Have Enough Quality Content

A big indexing problem might be a lack of good content on your website. While searching for content that matches user queries, the bot evaluates the quality of your content. If it crawls your website and, after evaluating your content, determines it’s not a fit, your pages won’t be indexed.

How Can You Get Google To Index Your Web Pages?

The goal of Google indexing is to serve searchers the right answers to their queries. This means that if the crawler isn’t indexing your website, you won’t rank or show up when searchers enter their queries. However, not having your pages indexed isn’t the end of the road. Below are 7 ways to get Google to index your pages.

7 Ways To Get Google To Index Your Pages

1. Fix All Your “Orphan Pages”

Orphan pages are pages on your website that no other internal page links to.

Googlebot discovers new pages mainly by following hyperlinks in internal and external content. If a page is orphaned, the bot may never discover it, which means it won’t get crawled.

A good way to fix orphan pages is to find relevant crawled pages on your website and create an internal link from them pointing back to your orphan page.

This way, when the bots crawl your website for new or updated content, they can follow the link from the previously crawled page to the new one.

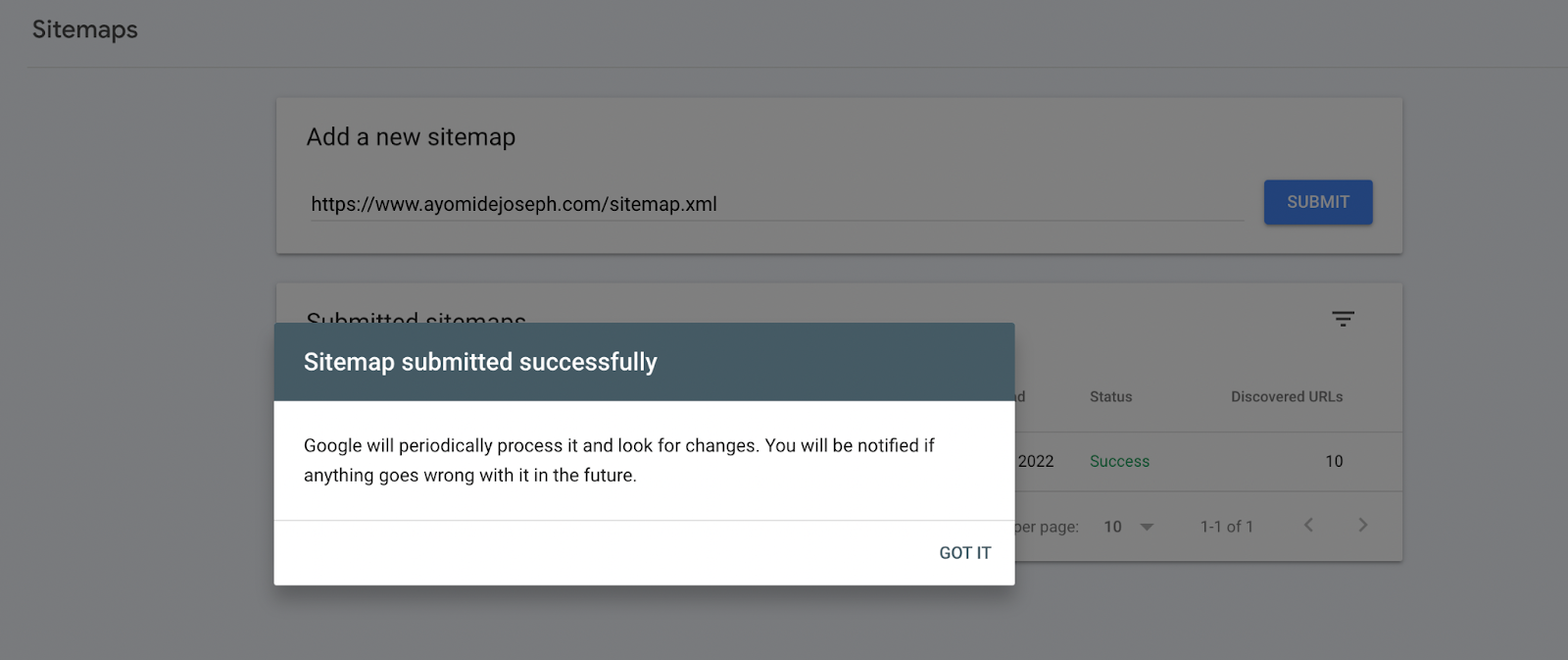

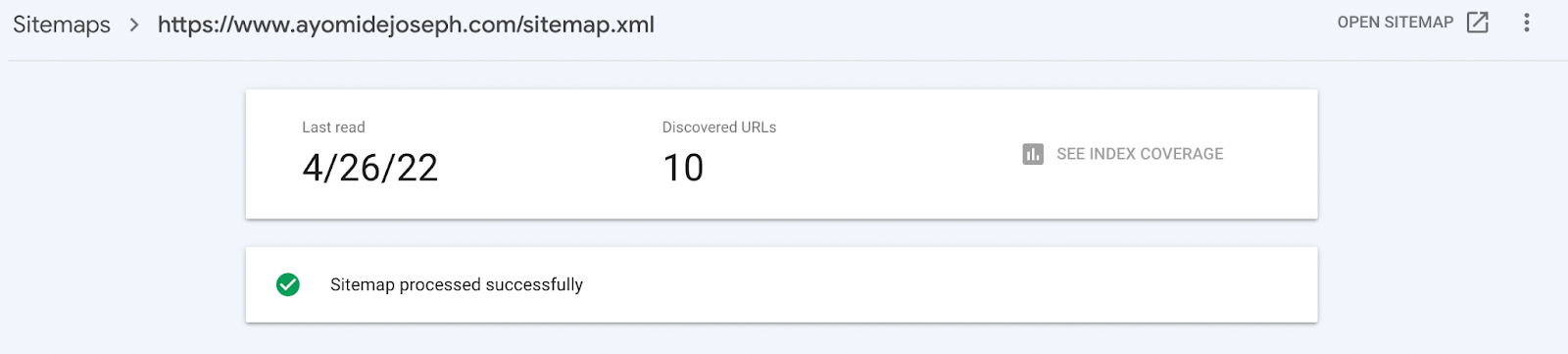
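If you have a list of all your pages (for example, from your sitemap or CMS) and the internal links found on each page, you can spot orphans by checking which pages no other page links to. A minimal Python sketch, with a hypothetical `find_orphans` helper and example link data:

```python
def find_orphans(link_graph, known_pages, homepage="/"):
    """Pages in known_pages that no other page links to (homepage excluded)."""
    linked_to = {
        target
        for page, links in link_graph.items()
        for target in links
        if target != page          # a self-link doesn't help discovery
    }
    return sorted(p for p in known_pages if p != homepage and p not in linked_to)

links = {
    "/": ["/blog"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": [],
    "/blog/post-2": ["/blog"],   # links out, but nothing links in
}
all_pages = ["/", "/blog", "/blog/post-1", "/blog/post-2"]
print(find_orphans(links, all_pages))  # ['/blog/post-2']
```

Adding a link to /blog/post-2 from /blog removes it from the list. This check only looks at inbound links; a page linked solely from other orphans is still unreachable from your homepage, which a full crawl starting at the homepage would reveal.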

2. Submit Your Sitemap to Google

A staggering 4.4 million blog posts go live every day across all platforms, so Google can’t discover every new page as soon as it’s published. If your website isn’t getting indexed automatically, it’s best to generate a sitemap and submit it to Google Search Console.

Follow these instructions to submit your sitemap:

- Log in to your Google Search Console.

- If you run more than one website, select your website from the property dropdown in the left sidebar.

- Under the “Index” section in the left sidebar, click “Sitemaps.”

- Enter your sitemap’s URL in the field provided.

- Click “Submit” to submit your sitemap.

- Here’s the notification you’ll get after submitting your sitemap.

Depending on your website’s size, it can take anywhere from a few hours to several months for Google to index it.

3. Structure Your SEO Tags Correctly

Your tags play a crucial role in determining whether Googlebot will crawl and index your page. You usually shouldn’t let search engines index all of your pages, because some of them shouldn’t be visible to unauthorized third parties.

For example, you don’t want search engines to index your admin or customer panel.

Use the right SEO tags, such as the X-Robots-Tag HTTP header and the robots meta tag, to tell bots not to index those pages. However, putting these tags on the wrong pages can hurt your visibility.

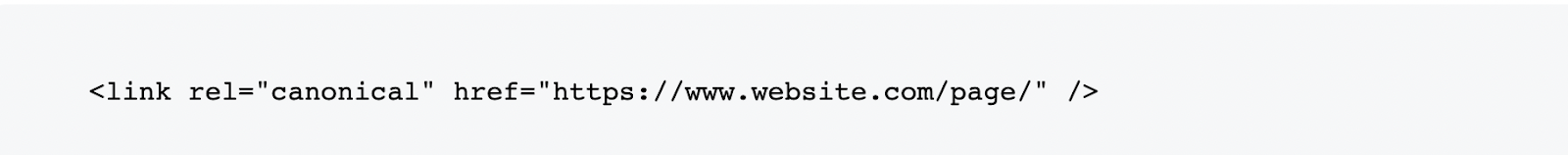

Another SEO tag is the canonical tag, which tells crawlers which version of a piece of content is preferred.

It goes in the page’s <head> and looks like this: `<link rel="canonical" href="https://yourwebsite.com/preferred-page/">`

Let’s say you have two identical pages on your website, but you don’t want both competing on SERPs. You can use the canonical tag to indicate the version of the page you want indexed. This way, the bots know to treat the other page as a duplicate instead of indexing it separately.
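To see which version of a page a canonical tag points to, here’s a short Python sketch using the standard-library `html.parser`. The `canonical_of` helper and the example URLs are hypothetical.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag on a page."""
    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attrs.get("href")

def canonical_of(html):
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical

pages = {
    "https://example.com/shoes/": '<head><link rel="canonical" href="https://example.com/shoes/"></head>',
    "https://example.com/shoes/?sort=price": '<head><link rel="canonical" href="https://example.com/shoes/"></head>',
}
for url, html in pages.items():
    target = canonical_of(html)
    status = "preferred" if target == url else f"duplicate of {target}"
    print(url, "->", status)
```

Both URLs point to the same canonical, so the sorted variant is reported as a duplicate of the preferred page.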

4. Build Quality Backlinks To Your Pages

There’s a high probability Google will ignore your pages if they have few or no backlinks from other sources. We advise you to start looking for quality backlinks through guest posting or white-hat link exchanges to get your pages indexed. If you’re just starting out, you can also use directories to your advantage, but only as a short-term tactic.

5. Optimize Your Page To Fit Your Crawl Budget

There’s no fixed crawl budget that applies to every website, and Google won’t reveal yours either.

Once you’ve published enough pages that getting them all indexed becomes harder, it’s time to estimate your crawl budget. It’s advisable to optimize your website’s crawl budget by prioritizing quality content instead of wasting it on what Google calls low-value pages.

Google goes on to say:

“Wasting server resources on pages like these will drain crawl activity from pages that do actually have value, which may cause a significant delay in discovering great content on a site.”

Some contributing factors that drain your crawl budget include:

- Soft error pages

- Hacked pages

- Infinite spaces and proxies

- Low quality and spam content

- Faceted navigation and session identifiers

- On-site duplicate content

Fixing the issues above will improve your crawl budget and provide you with a higher chance of getting all your pages indexed without issues.
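Faceted navigation and session identifiers drain crawl budget because they generate many URLs for the same content. The Python sketch below illustrates the idea by normalizing URLs: it drops session parameters, sorts the remaining query parameters, and lowercases the host, so variants collapse into one. The parameter names in `SESSION_PARAMS` are hypothetical; substitute whatever your site actually uses.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical parameter names; use whatever your site actually appends.
SESSION_PARAMS = {"sessionid", "sid"}

def normalize_url(url):
    """Collapse URL variants that serve the same content into one form."""
    parts = urlsplit(url)
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS       # drop session identifiers
    ]
    params.sort()                                   # ?a=1&b=2 and ?b=2&a=1 become one URL
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/", urlencode(params), ""))

crawled = [
    "https://example.com/shoes?color=red&size=9&sessionid=abc",
    "https://example.com/shoes?size=9&color=red&sessionid=xyz",
    "https://example.com/shoes?color=red&size=9",
]
unique = {normalize_url(u) for u in crawled}
print(unique)  # {'https://example.com/shoes?color=red&size=9'}
```

Running this over a list of crawled URLs gives a rough sense of how many of them are really the same page.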

6. Switch To Mobile-First Indexing

Since Google released the mobile-first indexing update, websites with poor mobile device compatibility have recorded several indexing issues.

Two of the common issues include:

Mobile crawling issues

Some websites’ host servers handle requests from Googlebot’s mobile crawler differently from desktop requests, which makes it difficult for Google to crawl new pages on these websites.

Mobile Page Content Issues

Google primarily indexes the mobile version of your website, so if the content on your mobile pages differs from your desktop pages, content missing from the mobile version may not get indexed.

To solve either of these issues, make your website mobile-friendly. For that, you can work on improving your mobile page speed and responsiveness.
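For a rough parity check, you can compare the text of the desktop and mobile versions of a page. The Python sketch below assumes you already have both versions as HTML strings (fetching them is not shown), and `missing_on_mobile` is a hypothetical helper. It compares individual words, so it’s a crude signal for spotting missing sections, not a full diff.

```python
from html.parser import HTMLParser

class TextCollector(HTMLParser):
    """Collects lowercase visible words, skipping <script> and <style> contents."""
    def __init__(self):
        super().__init__()
        self.words = set()
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words.update(word.lower() for word in data.split())

def words_in(html):
    collector = TextCollector()
    collector.feed(html)
    return collector.words

def missing_on_mobile(desktop_html, mobile_html):
    """Words on the desktop page that never appear on the mobile page."""
    return sorted(words_in(desktop_html) - words_in(mobile_html))

desktop = "<p>Free shipping on all orders</p><p>Returns within 30 days</p>"
mobile = "<p>Free shipping</p>"
print(missing_on_mobile(desktop, mobile))
# ['30', 'all', 'days', 'on', 'orders', 'returns', 'within']
```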

To confirm if your page is mobile-friendly, follow these instructions:

- Go to Google’s Mobile-Friendly Test tool.

- Enter your website’s URL.

- Click on “Test URL”

- You can also click on “view tested page” to see how your page renders on mobile.

7. Request Indexing Manually

If you’ve checked all the boxes and you’re doing everything right, but Google still isn’t indexing your website, requesting indexing manually is your remaining option. Here’s how to do it.

- First, go to your Google Search Console.

- In the left sidebar, click “URL inspection” and enter the URL of the page you want indexed.

If the page is already indexed you’ll receive a message like this:

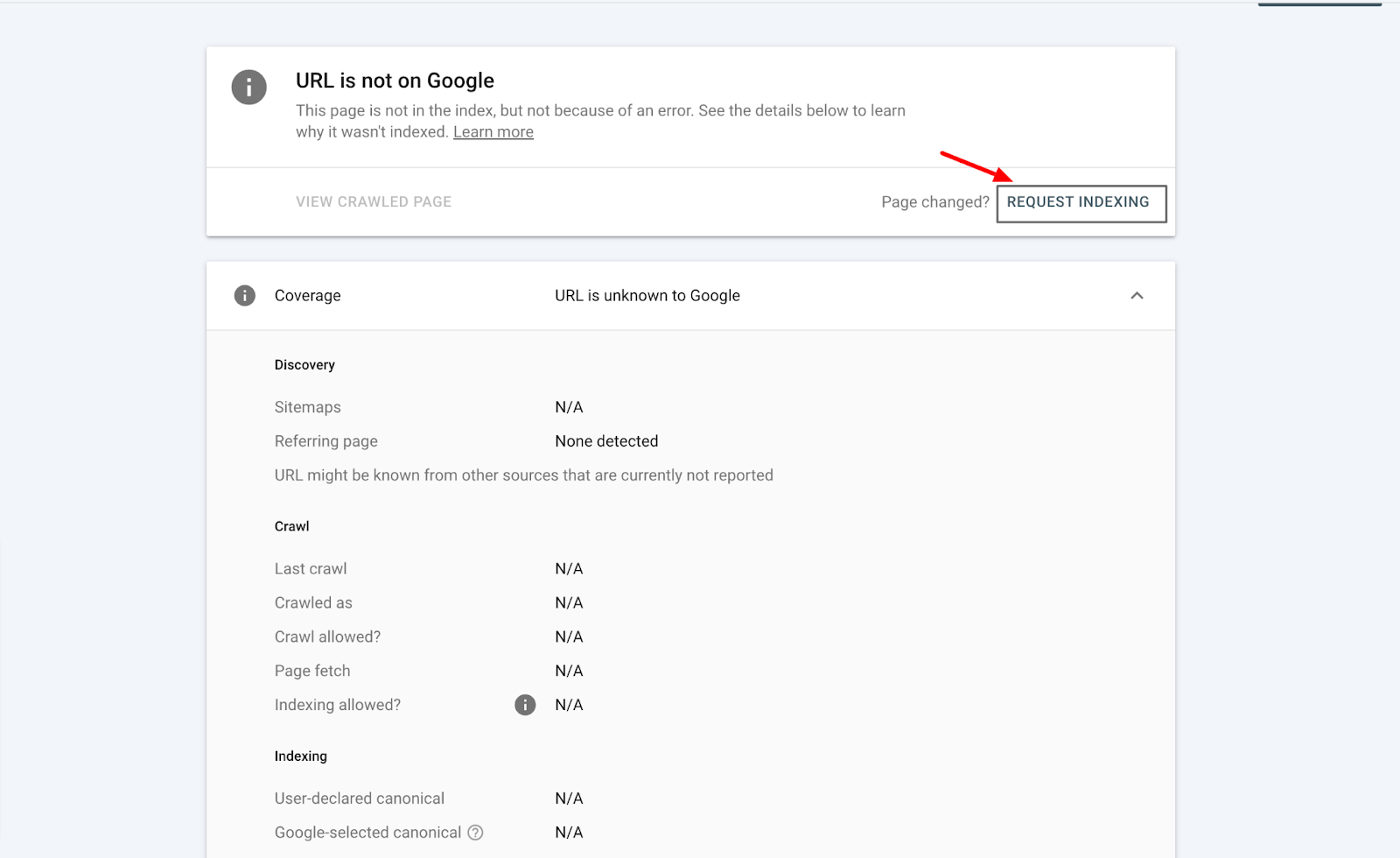

If it’s not, you’ll get a notification like the one below, which lets you request indexing for that URL.

Note: Google won’t accept indexing requests for pages that don’t pass the live test.

“If the page passes a quick check to test for immediate indexing errors, it will be submitted to the indexing queue. You cannot request indexing if the page is considered to be non-indexable in the live test.”

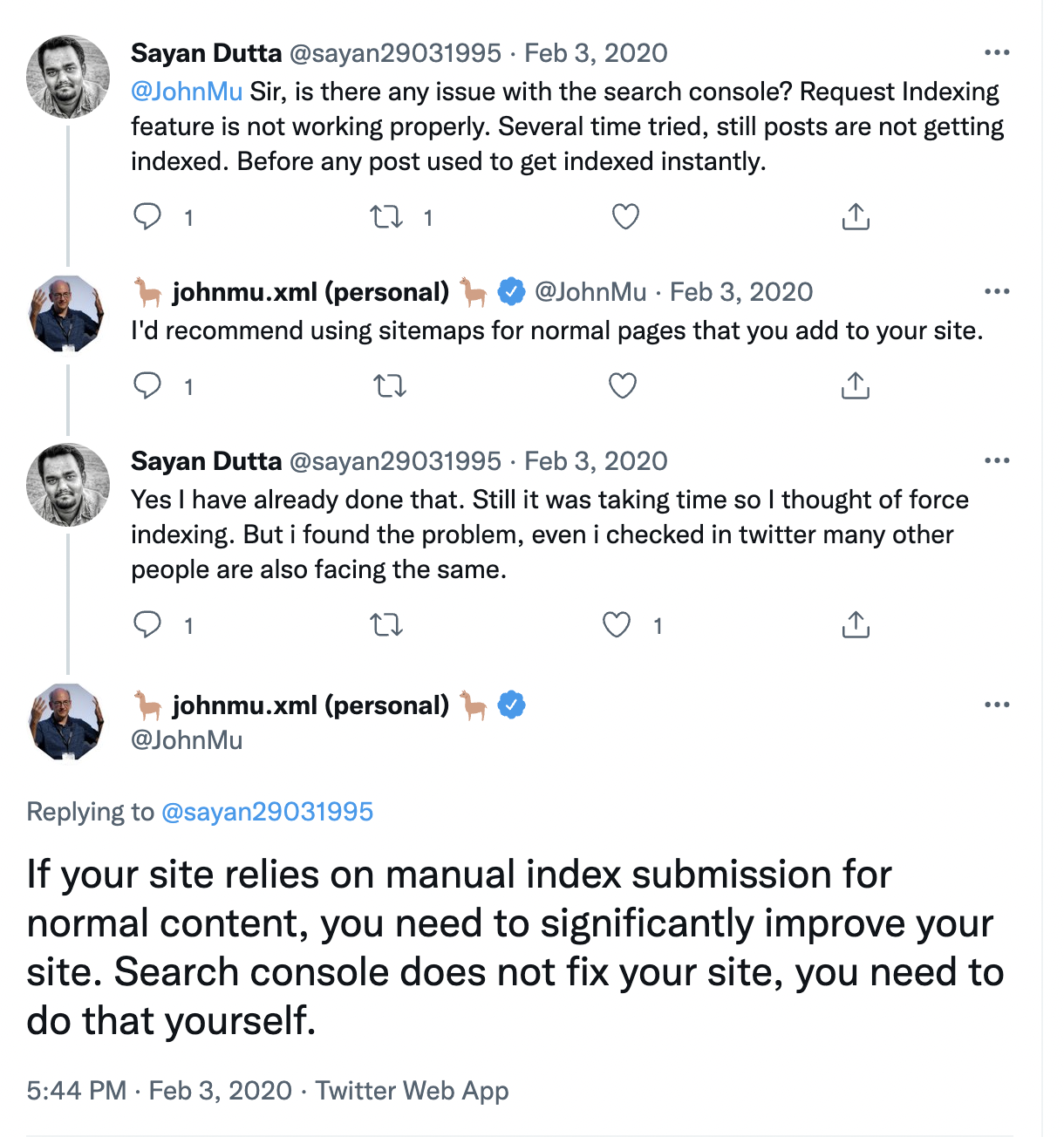

Also, although requesting indexing manually improves your chances of getting indexed, it shouldn’t become a routine part of your workflow.

According to Google’s John Mueller:

“If your site relies on manual index submission for normal content, you need to significantly improve your site. Search console does not fix your site, you need to do that yourself.”

After Indexing Your Page, What Next?

A common misconception is that indexing and ranking are the same thing. Unfortunately, being indexed doesn’t mean you’ll rank on SERPs, but it gives you a fighting chance at a spot on the top pages.

Ranking takes more than getting your content indexed. It spans 7 core Google ranking factors that influence your SEO.

Indexing your page is the first step of many when it comes to building your website for potential growth.

Looking to improve your website performance today? Start using Edgemesh in just 5 minutes.
